The Algorithm: Simplified

Philosophy

The algorithm that we have implemented is derived from the Elo rating system. The Elo system was originally developed to provide a relative ranking system for two-player games such as chess, but it has been generalized and applied to many other sports including college football, basketball and Major League Baseball.

The basic assumption of the Elo system is that the performance of any individual (or team) in a game is a normally distributed (Gaussian) random variable. While any single performance may differ significantly from one game to the next, the mean of those performances remains relatively constant, so a competitor's true skill is best represented by that mean. Further statistical studies have shown that individual performances are better modeled by the closely related logistic distribution than by the normal distribution. For that reason, we use the logistic distribution in the FTS algorithm.

In creating the power ratings, we made a few arbitrary choices about how the ratings would be presented. We chose the scale so that most teams have a power rating between 500 and 1000. Nothing inherently prevents a rating from going higher or lower, but the system feeds back on itself in such a way that it becomes increasingly difficult for a team to stray much beyond this range.

The Scoring Metric

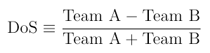

It is necessary to have a measurable way of judging the outcome of a bout. A simple win or loss does not give enough fidelity to gauge the strength of a performance accurately. We instinctively use the score, and more specifically the score difference, to measure a team's strength (e.g. if Team A beat Team B by 250 points while Team C beat Team B by only 100 points, then Team A must be stronger than Team C). However, this overly rewards teams with a high-output offense while undervaluing teams with a strong defense. Suppose, for example, that Team A beats Team B by a score of 320 to 205 while Team C beats Team B by a score of 95 to 3. By score difference alone, Team A would be considered the stronger team. However, it could well be argued that Team C had more dominant control of its game, scoring at will while keeping Team B from scoring. To account for this, we use a Difference-over-Sum (DoS) method, which provides a normalized ratio of the scores that is independent of the overall scoring level:

$$\mathrm{DoS} = \frac{S_A - S_B}{S_A + S_B}$$

where $S_A$ and $S_B$ are the final scores of Teams A and B.

The DoS ranges from +1 (a shutout win by Team A) to -1 (a shutout win by Team B), with 0 representing a tie. In the previous example, the DoS for Teams A and B would be 0.22, while the DoS for Teams C and B would be a much more dominant 0.94. Throughout our testing, we have found the DoS to be a very reliable gauge of a team's strength, and we have adopted it as the primary metric through which predictions and bout results are compared.
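The DoS calculation is simple enough to check by hand, but a short function makes the worked example concrete. This is a minimal sketch; the function name is hypothetical, and treating a 0-0 result as an error is our own assumption, since the text does not say how such a bout would be handled.

```python
def difference_over_sum(score_a: int, score_b: int) -> float:
    """DoS from Team A's point of view, in the range [-1, +1].

    +1 is a shutout win by Team A, -1 a shutout win by Team B, 0 a tie.
    """
    total = score_a + score_b
    if total == 0:
        # Assumption: a 0-0 bout is treated as undefined rather than as a tie.
        raise ValueError("DoS is undefined when both scores are zero")
    return (score_a - score_b) / total


# The two bouts from the example above.
print(round(difference_over_sum(320, 205), 2))  # 0.22
print(round(difference_over_sum(95, 3), 2))     # 0.94
```

Because the difference is divided by the total, a 95-3 blowout scores higher than a 320-205 win even though its raw margin is smaller.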

The Prediction Algorithm

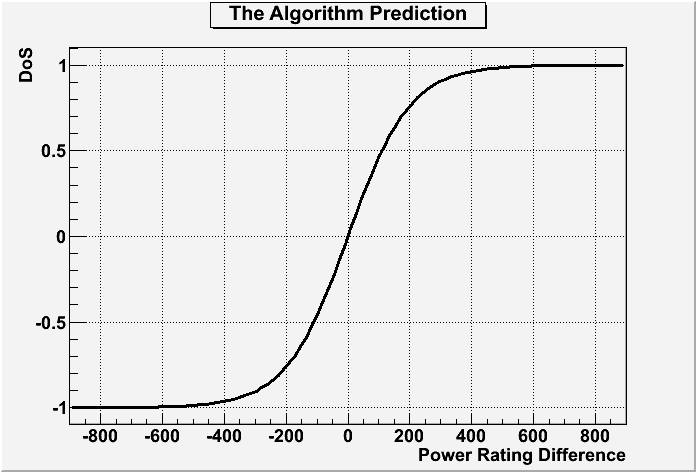

The standard logistic cumulative distribution function (CDF) produces a value between 0 and 1 and is symmetric about its midpoint at zero, where it equals 0.5. To match the range of the DoS, which runs from -1 to +1, and to allow flexibility in how the power rating values are scaled, we have generalized the logistic CDF as follows:

where $R_B$ and $R_A$ refer to the power ratings of Teams B and A respectively, and a bias parameter provides a mechanism to adjust for any biases that may exist between the two teams. The CDF thus provides a prediction of the DoS between any two teams based on their relative ratings, as shown in the graph below.
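Because the exact generalized equation and its constants are not reproduced in this text, the following is only a sketch of one plausible form. It assumes the prediction is the standard logistic CDF stretched onto the DoS range, 2F(x) - 1, with x = (R_A - R_B + bias) / SCALE. The SCALE value of 100 rating points and the use of the natural exponential (rather than, say, base 10 as in classic Elo) are hypothetical choices, not FTS's published constants.

```python
import math

# Hypothetical scale: rating points per unit of the logistic argument.
# The actual FTS constant is not given in this text.
SCALE = 100.0


def predicted_dos(r_a: float, r_b: float, bias: float = 0.0,
                  scale: float = SCALE) -> float:
    """Predicted DoS for Team A against Team B, in the open range (-1, +1).

    A positive `bias` (in rating points) favors Team A.
    """
    x = (r_a - r_b + bias) / scale
    # 2 * F(x) - 1, with F(x) = 1 / (1 + exp(-x)) the standard logistic CDF.
    # This is algebraically equal to tanh(x / 2), which avoids overflow in
    # exp() for extreme rating gaps.
    return math.tanh(x / 2.0)


print(predicted_dos(700, 700))            # 0.0 (evenly matched)
print(round(predicted_dos(800, 600), 3))  # 0.762
print(round(predicted_dos(600, 800), 3))  # -0.762
```

Swapping the two teams flips the sign of the prediction, and the output approaches but never reaches +1 or -1, just as a real DoS only reaches the extremes on a shutout.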

Adjusting the Ratings

After a bout, the DoS predicted by the CDF is compared to the actual result. The difference between the two is multiplied by a scaling factor and used to adjust the ratings of both teams: one team's rating goes up and the other's goes down by exactly the same amount. It is important to realize that, because the ratings are based on the comparison between the prediction and the result, a team can win and still see its rating go down if it did not win as decisively as expected. Similarly, a team that loses by less than expected can see its rating rise.
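The update rule described above can be sketched as follows. It reuses the hypothetical logistic form and SCALE from the previous sketch, and the scaling factor K = 50 is likewise an illustrative assumption; the text does not state the value FTS uses.

```python
import math

SCALE = 100.0  # hypothetical logistic scale, in rating points
K = 50.0       # hypothetical scaling factor (rating points per unit of DoS error)


def predicted_dos(r_a: float, r_b: float, bias: float = 0.0) -> float:
    """2 * logistic CDF - 1, written as tanh for numerical stability."""
    return math.tanh((r_a - r_b + bias) / (2.0 * SCALE))


def update_ratings(r_a: float, r_b: float, actual_dos: float,
                   bias: float = 0.0, k: float = K) -> tuple[float, float]:
    """Return the new (r_a, r_b) given the actual DoS from Team A's side."""
    error = actual_dos - predicted_dos(r_a, r_b, bias)
    change = k * error
    # Equal and opposite adjustments, so the pair's total rating is unchanged.
    return r_a + change, r_b - change


# Team A (800) beats Team B (600), but by less than predicted (0.5 vs ~0.762).
new_a, new_b = update_ratings(800, 600, actual_dos=0.5)
print(round(new_a, 1), round(new_b, 1))  # 786.9 613.1
```

In this example Team A wins the bout yet loses about 13 rating points, because its DoS fell short of the prediction.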

The ratings are adjusted in the same way after every bout, and the new ratings are used for the next prediction. As a result, a rating is a compilation of a team's entire history. However, we have kept the scaling factor large enough that a rating can move rather rapidly after a major change in a team's skill or performance level, usually settling into a new power rating range within only two or three bouts.
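To illustrate how each update feeds the next prediction, here is a sketch that processes a chronological list of bouts. The team names, SCALE, K, and the starting rating of 750 for a team with no history are all hypothetical; the text does not say how new teams are seeded. Scores are assumed never to both be zero.

```python
import math
from typing import Dict, Iterable, Tuple

SCALE = 100.0           # hypothetical logistic scale
K = 50.0                # hypothetical scaling factor
DEFAULT_RATING = 750.0  # hypothetical starting rating for an unseen team

Bout = Tuple[str, str, int, int]  # (team_a, team_b, score_a, score_b)


def predicted_dos(r_a: float, r_b: float) -> float:
    return math.tanh((r_a - r_b) / (2.0 * SCALE))


def run_history(bouts: Iterable[Bout]) -> Dict[str, float]:
    """Apply rating updates bout by bout, in chronological order."""
    ratings: Dict[str, float] = {}
    for team_a, team_b, score_a, score_b in bouts:
        r_a = ratings.get(team_a, DEFAULT_RATING)
        r_b = ratings.get(team_b, DEFAULT_RATING)
        actual = (score_a - score_b) / (score_a + score_b)  # DoS
        change = K * (actual - predicted_dos(r_a, r_b))
        # The updated ratings are what the next bout's prediction will use.
        ratings[team_a] = r_a + change
        ratings[team_b] = r_b - change
    return ratings


history = [
    ("Alpha", "Bravo", 200, 100),
    ("Alpha", "Charlie", 180, 90),
    ("Bravo", "Charlie", 150, 150),
]
for team, rating in sorted(run_history(history).items()):
    print(team, round(rating, 1))
```

Because every update is zero-sum, the ratings of this closed group always add up to the number of teams times the starting rating; only their distribution changes.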

Home/Away Bias

One of the interesting things we found while analyzing past bouts is a small but measurable bias in favor of the home team. This bias varies slightly depending on whether a bout was played as a normal hosted match or as part of a ranked or invitational tournament. In normally hosted bouts, home teams typically outperform their predicted DoS by about 0.06. This bias was found to be independent of team, region, and relative strength, so we were able to account for it with the bias parameter in the CDF equation. Taking the home/away bias into account improved our prediction accuracy significantly.
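The 0.06 figure is measured in DoS units, while the bias in the CDF acts on ratings, so some conversion is needed. The sketch below shows one possible approach, which is our own assumption rather than the documented FTS method: choose the bias so that two equally rated teams are predicted a 0.06 DoS edge for the host. It reuses the hypothetical logistic form and SCALE from the earlier sketches.

```python
import math

SCALE = 100.0         # hypothetical logistic scale, in rating points
HOME_DOS_EDGE = 0.06  # home-team DoS advantage reported for normal hosted bouts


def predicted_dos(r_home: float, r_away: float, bias: float = 0.0) -> float:
    return math.tanh((r_home - r_away + bias) / (2.0 * SCALE))


def home_bias_points(dos_edge: float = HOME_DOS_EDGE) -> float:
    """Rating-point bias giving evenly rated teams a `dos_edge` home advantage.

    Solves tanh(bias / (2 * SCALE)) == dos_edge for bias.
    """
    return 2.0 * SCALE * math.atanh(dos_edge)


bias = home_bias_points()
print(round(bias, 1))                            # 12.0
print(round(predicted_dos(700, 700, bias), 3))   # 0.06
```

With a fixed rating-point bias, the DoS shift is largest for evenly matched teams and shrinks for lopsided matchups, because the logistic curve flattens near its extremes. Whether that matches the observed home advantage across mismatches is not something this sketch can confirm.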